Companies spend 40-60% of revenue on payroll, and some of this enormous expense is driven by management decisions about recruiting, promoting and training that are made on gut feel. That said, HR is an area of active innovation. The growing discipline of people analytics and improved technology are just two of the ways the function is becoming data-savvy and predictive.

My goal this month was to understand more about what AI is and how it will impact HR and the future of work. My takeaways:

- Robots are not going to take our jobs. At least not in the near future. The most compelling applications of AI currently enhance human work. Jobs, and the skill sets needed to excel in this environment, will likely change, with a focus on things humans are already good at, like thoughtful communication and making judgment calls with complex information.

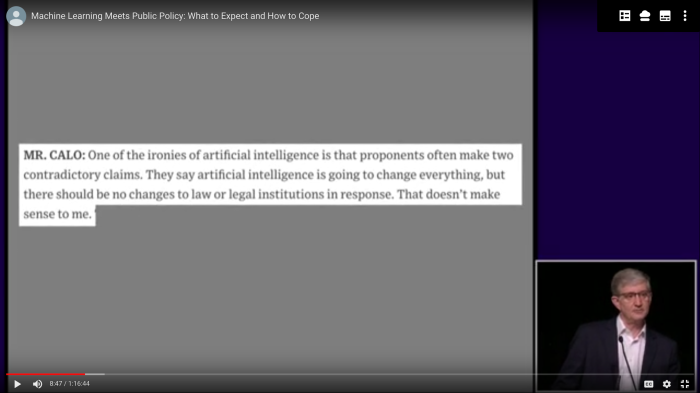

- The ethical implications of AI are a serious concern. There are many, many discussions on this topic, but no clear guidelines have emerged.

- Regulation lags implementation. As with the ethical concerns, it’s not yet clear how companies will be asked to stay compliant when using AI tools.

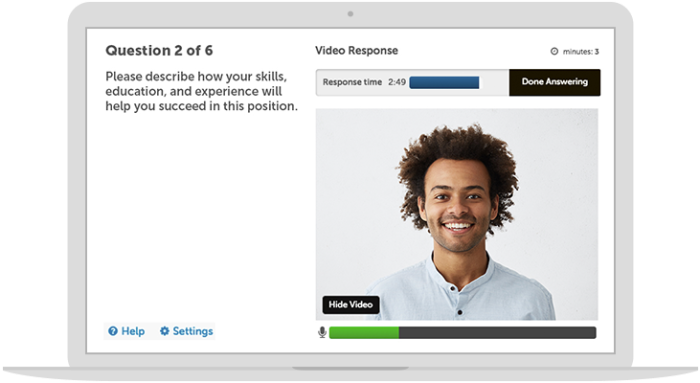

- Interacting with AI can be fun! Concerns that AI leads to a poor user experience or an inhuman touch seem to be unfounded for the most part. While there are complaints about highly automated recruiting processes, many platforms provide transparency and feedback at a scale that isn’t possible for traditional recruiting organizations.

Thanks for engaging in #AIFebruary. It’s been fun to hear from people interested in the topic and always amazing to find kindred hobbyists on the internet. Please continue to reach out to me with questions and comments! I’ll continue to share my thoughts on the topic here and on Twitter.